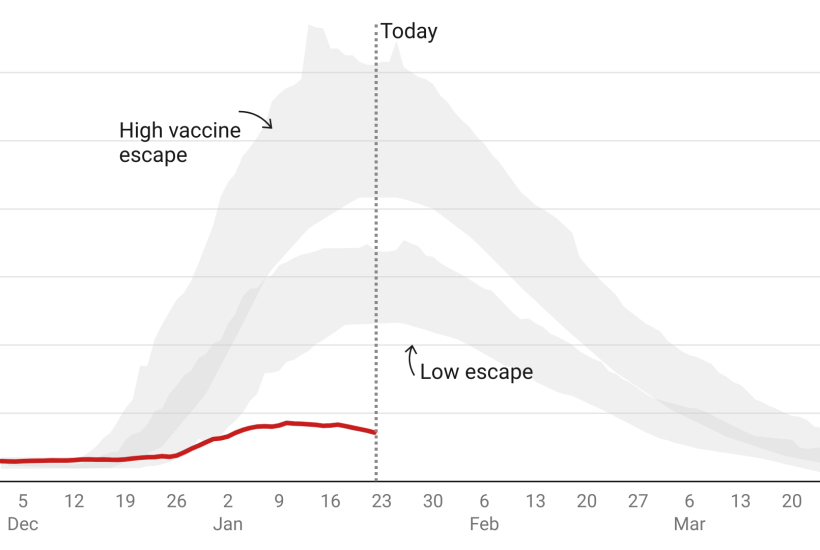

Did we lock down on a false premise? Yesterday was Ben Warner’s turn at the Covid Inquiry. He was an adviser, and one of the ‘tech bros’ brought in by Dominic Cummings to advise No. 10 on data. He was present at many of the early Sage – and other – meetings where the government’s established mitigation (herd immunity) plan was switched to the suppression (lockdown) strategy.
