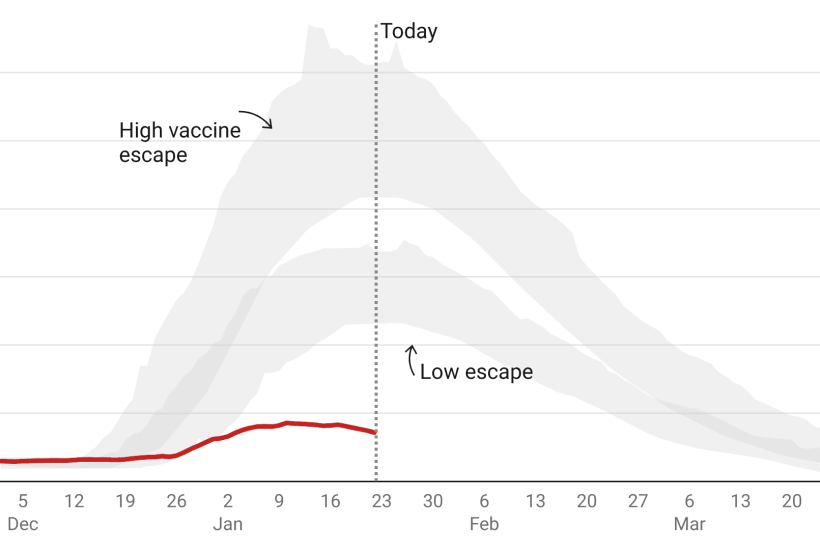

Given that lockdown was very nearly ordered on the advice of Sage last month, it’s worth keeping an eye on the ‘scenarios’ it published, and how they compare to the situation today. Another week of data offers more food for thought. This week was the period when deaths were supposed to be peaking – so given that no extra restrictions were ordered, it’s interesting to compare the peak the models predicted for this week with what actually happened.
