Having written (for a Times diary) a few sentences about consciousness in robots, I settled back to study readers’ responses in the online commentary section. They added little. I was claiming there had been no progress since Descartes and Berkeley in the classic philosophical debate about how we know ‘Other Minds’ exist; and that there never would be. A correspondent on the letters page referred me to Wittgenstein’s treatment of the subject and so I studied his remarks. I have to confess they are, to me, unintelligible.

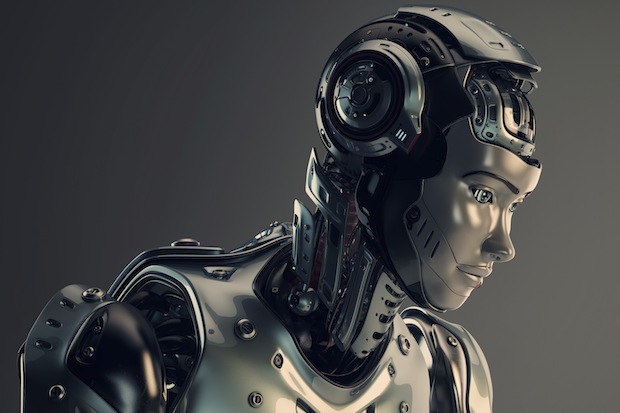

But I cannot let the matter rest. My earlier thoughts had been prompted by newspaper reports of the adventures of a talking, hitchhiking Canadian robot called Hitchbot. Every time such a ‘Whatever will robots learn to do next?’ story gets a public airing, we go the rounds of the same old discussion — a discussion that started in earnest in the 20th century: the debate about whether a machine could ever be so clever and responsive that we might call it ‘conscious’ in the sense we humans think we ourselves are conscious.

The debate never gets anywhere beyond the conclusion that, technologically, we’re a long way from that yet. True enough. But you cannot pursue it for long without becoming aware that the question of whether a machine could have consciousness can only lead to the question of how, if it did, we could know that it did. And this question in turn leads back to the question of how we can know that anything other than ourselves has consciousness. How do we know other minds exist? Maybe Descartes’s cogito ergo sum (I think therefore I am) is all we can know?

And there, it might be thought, the matter must rest. That other minds exist is a working assumption that serves us well. We observe our own responses to the world — to pain, to hunger, to loss — and we note that other humans respond in broadly similar ways. They tell us of their feelings and we empathise. We therefore suppose that what they say and how they respond is actuated by the same consciousness as that of which we can only have direct experience in ourselves.

By analogy with ourselves we assume it’s there. Arguing about whether we could actually prove it is airy-fairy stuff, best left to philosophers in ivory towers and very far from the lives and curiosities of most people. Nevertheless, I insist that it is logically impossible we could ever experience another’s consciousness, because all our experience must come through the portals of our own consciousness.

That’s why I wrote in the Times that what was true three centuries ago must remain true three centuries hence. I now think I was right on the last count (that nothing more will ever be proved) but wrong on the first: that to most people this can only ever be academic. The development of artificial intelligence over the last century, and its further development in centuries to come, will cause us to revisit the question of Other Minds, and will begin to trouble perfectly ordinary people. It is not arcane.

Philip K. Dick in his 1968 science-fiction novel Do Androids Dream of Electric Sheep? was not the first writer, but he was arguably the most engaging, to raise the question of whether artificially created ‘intelligences’ can be conscious, or simply very good at emulating consciousness. In his story we are to assume the latter, although the business of finding out whether apparent humans are actually androids is medically very difficult. In the 1982 film Blade Runner, however, based on Dick’s novel (an adaptation of which he approved), it does appear that the ‘replicants’ are capable of human consciousness.

But what tingles the spines of audiences for both stories is the ambiguity, the hovering question, about ‘real’ feelings. The primary plot-line — ‘How do we know?’ — may be the detection of artificial people masquerading as real ones, but the secondary tease is a stranger question: what is it that makes a human ‘conscious’? And can it only ever be simulated, or might it be artificially created? And is consciousness an all-or-nothing attribute, or might there be halfway stages?

My contention is that as machines get cleverer and cleverer, as they become programmable to ‘speak’ to us and respond to us and to what we are doing, we shall be more and more teased by such questions. Any driver who has ever shouted at the satnav lady will know what I mean.

To a degree this may just be the age-old human tendency to anthropomorphise. The Japanese Tamagotchi toy — a sort of electronic egg that you could hatch on-screen, and nurture (or neglect) and influence — may have melted the hearts of millions, but so did inanimate rag dolls. Nobody’s fooled, it’s just that we like to pretend.

But those who could not suppress a tear when R2D2 is badly wounded in Star Wars will surely confess that if they had an astromech droid of their own, they would have toyed with the idea he was not only conscious but kindly. They would mourn his death.

Plainly we are only in the foothills of artificial intelligence. We may look back on the robots of the early 21st century as the developers of today’s heat-seeking surface-to-air missiles look back on the Boy David’s sling. And that’s to speak only of a machine’s technical capabilities: we shall learn, too, to make them lovable, variable, funny, temperamental. We shall learn to make them capable of reproducing more machines, and teaching their offspring things. I cannot believe that, as these artificial intelligences gain similarities with their human makers and, most importantly, begin to gain autonomy, even independence, from us, our natural tendency to anthropomorphise will not invest them with scarily human personae.

Finally — and this is the really scary bit — I cannot but believe that when it becomes easy and natural to anthropomorphise machines, we shall ask ourselves with more anguish the ancient question: are we anthropomorphising each other?
