Have you ever wondered what feeds your internet search results on Google, Yahoo, or Bing?

What about question-answering systems such as Apple’s Siri, Amazon’s Alexa, or AI language models?

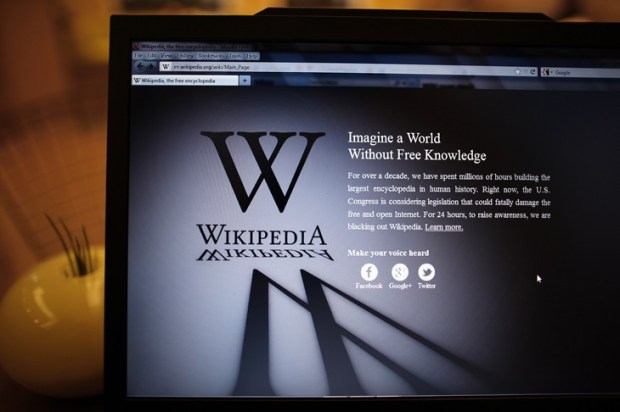

In almost all cases, Wikipedia plays a significant role as a primary corpus of information feeding these services.

Wikipedia’s success helps explain why tech giants such as Google adapted their algorithms to selectively extract Wikipedia’s information to populate Google’s knowledge panels.

Try a month of The Spectator Australia absolutely free and without commitment. Not only that but – if you choose to continue – you’ll pay just $2 a week for your first year.

- Unlimited access to spectator.com.au and app

- The weekly edition on the Spectator Australia app

- Spectator podcasts and newsletters

- Full access to spectator.co.uk

Comments

Don't miss out

Join the conversation with other Spectator Australia readers. Subscribe to leave a comment.

SUBSCRIBEAlready a subscriber? Log in